Trace

Why We Moved from SQLite to DuckDB: 5x Faster Queries, ~80% Less Storage

From the start, Trace has used SQLite to store all its data locally on the user’s device. It has been a great choice—highly reliable and widely supported across multiple languages. After all, it is the world’s most deployed database.

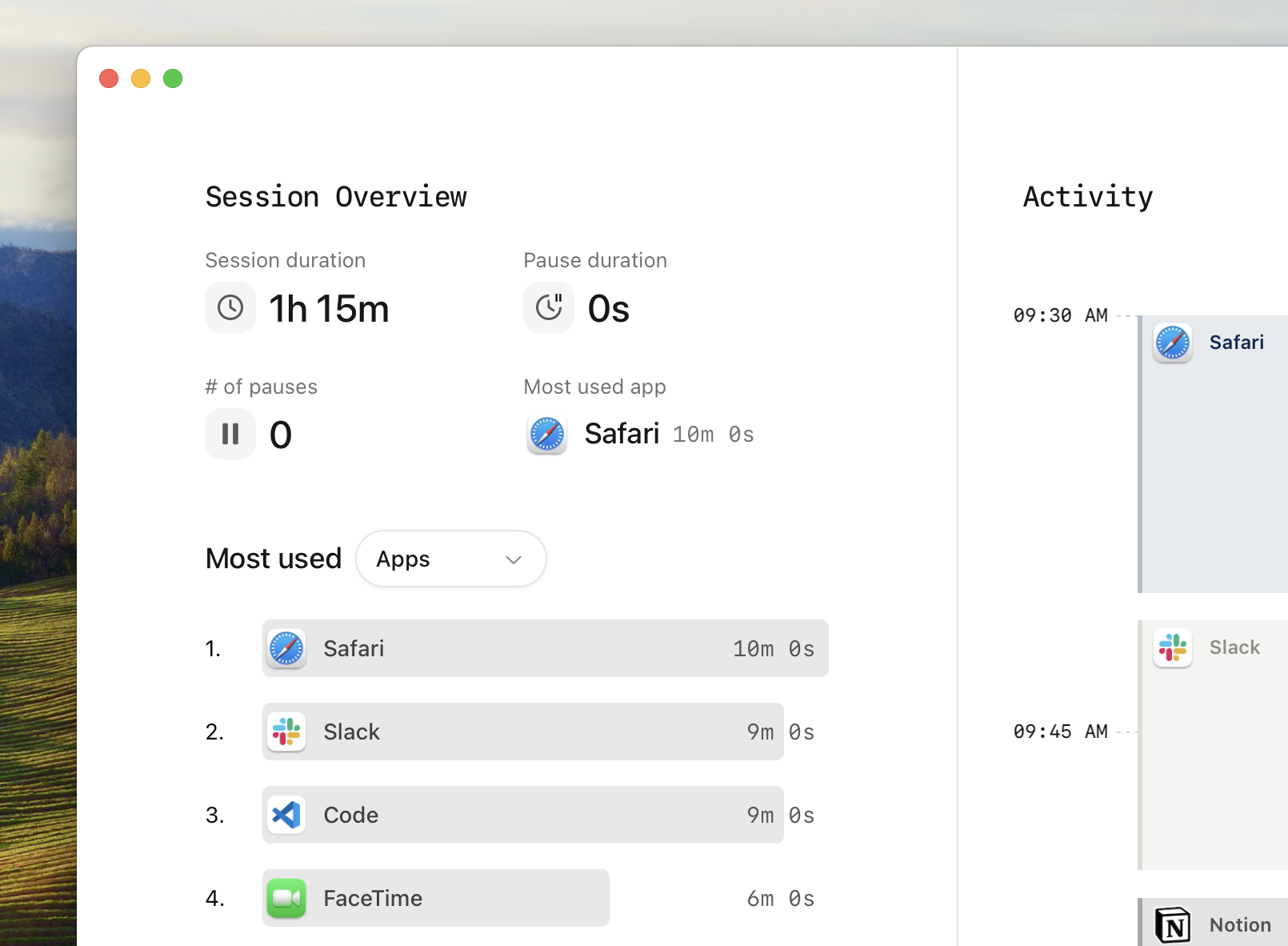

For those unfamiliar, Trace is a macOS time tracking app that monitors how you spend your time on your Mac. Given its purpose, Trace can process a significant amount of data, making it a data-intensive application. Although SQLite served us well, we faced two key challenges:

- Over time, the SQLite database file can grow significantly in size.

- As the dataset expands, aggregate queries start to slow down.

We’ve looked at many ways to optimize SQLite, but then stumbled upon DuckDB: a similar embeddable database system that is optimized for analytical workloads. After testing DuckDB for some time, it proved itself to be a much better fit for our time tracking software than SQLite.

Why DuckDB

We needed a database system that could be embedded into our app while being fast and reliable. Both SQLite and DuckDB met these criteria. However, we found DuckDB to be 3-5 times faster at executing our analytical queries. This factor only grew as we increased the data volume.

Our largest queries invole multiple joins across large tables and views while heavily relying on aggregate SQL functions. Given this workload, it’s no surprise that DuckDB outperformed SQLite in this area.

From DuckDB’s docs:

DuckDB is designed to support analytical query workloads, also known as online analytical processing (OLAP).

…

To efficiently support this workload, it is critical to reduce the amount of CPU cycles that are expended per individual value. The state of the art in data management to achieve this are either vectorized or just-in-time query execution engines. DuckDB uses a columnar-vectorized query execution engine, where queries are still interpreted, but a large batch of values (a "vector") are processed in one operation. This greatly reduces overhead present in traditional systems such as PostgreSQL, MySQL or SQLite which process each row sequentially. Vectorized query execution leads to far better performance in OLAP queries.

Slides from Gábor Szárnyas's DuckDB talk at GOTO 2024

While SQLite processes rows sequentially, DuckDB uses a columnar-vectorized execution engine that processes large batches of values simultaneously. This approach is particularly effective for analytical workloads like ours.

To put this in perspective, benchmarks like ClickBench have shown DuckDB performing up to 1200 times faster than SQLite for certain analytical queries.

Although we saw significantly faster read performance with DuckDB for our workflow, it doesn’t perform as well as SQLite for single-row operations. In our testing, SQLite was about twice as fast as DuckDB in these cases.

That said, another key factor that led us to switch was DuckDB’s automatic on-disk compression, which drastically reduces storage space.

To illustrate the difference, we created a table similar to one used in our app, containing two timestamp columns, a primary integer ID, and a boolean column. Inserting 1,000,000 rows resulted in a 101.6 MB SQLite database file, while DuckDB required only 23.1 MB—a 77% reduction in data size.

Migration Process

The migration process wasn’t very smooth. SQLite has much bigger ecosystem across programming languages than DuckDB. In Trace, most of our codebase in written in Rust, and we used the Diesel ORM to handle database interactions.

DuckDB does have its own Rust crate, duckdb-rs, which is no ORM by any means. Therefore, we had to rewrite major parts of our codebase and implement our own database migration system.

During our re-write, we’ve also encountered some of the concurrency limitations of DuckDB. Previously when using SQLite, we’d open multiple concurrent connections that can perform operations on the same database table. With DuckDB, this type of workflow caused a lot of data loss as data from one connection would be saved, leaving the data from the other connection totally lost. The work-around to this type of issue wasn’t very difficult. We just had to make sure that only one thread can mutate a table at a given time.

Another thing to note is that bundling DuckDB with our app nearly doubled our bundle size from 26mb to 44mb. That is a significant increase, but it was definitely worth it for us.

Are we done with SQLite?

No. While DuckDB excels at analytical workloads, we still rely on SQLite for some core operations. SQLite is better suited for handling frequent, small transactions, as it is lightweight, fast for simple queries, and supports concurrent connections more effectively.

Overview

Since migrating over to DuckDB:

- Our read queries are at-least 3-5x faster (making our dashboards much faster as well)

- Our database file takes ~80% less storage space

- Our app's bundle size nearly doubled (26mb to 44mb)

Overall, we are very happy with this migration, and are excited to be using DuckDB moving forward.

Trace